Clustering Union Server

Union Server can be scaled by creating a cluster of Union Server instances called nodes.

Rooms

When a cluster is available, a room can be configured so that matching rooms are created across the cluster. This type of room clustering is called MASTER-SLAVE. The node where the create-room request originated creates the room with the role MASTER. All other nodes in the cluster automatically create a matching room with the role SLAVE.

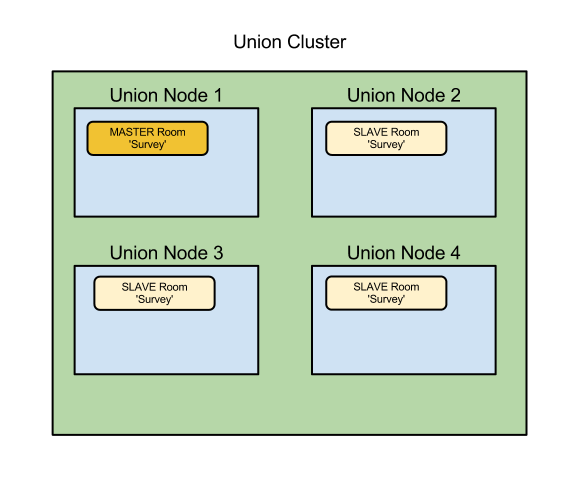

The following diagram shows how a 4-node cluster would look after creating the room 'Survey' on Union Node 1.

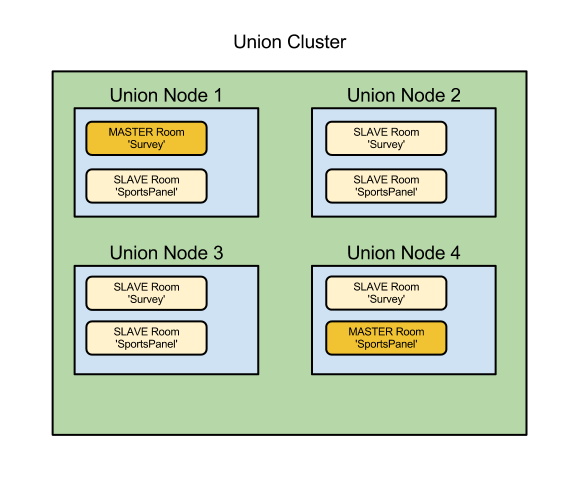

The following diagram shows how the cluster would look after creating another room 'ChatRoom' on Union Node 4.
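The role assignment described above can be modeled in a few lines. This is only a sketch of the rule, not Union's API: the `Cluster` and `Node` classes and the `create_room` and `roles` methods are hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field

MASTER, SLAVE = "MASTER", "SLAVE"


@dataclass
class Node:
    """Hypothetical model of one Union node: room id -> role on this node."""
    name: str
    rooms: dict = field(default_factory=dict)


class Cluster:
    """Hypothetical model of a cluster; not part of Union's API."""

    def __init__(self, node_names):
        self.nodes = {name: Node(name) for name in node_names}

    def create_room(self, origin, room_id):
        # The originating node gets the MASTER room, every other node a SLAVE.
        if origin not in self.nodes:
            raise KeyError(f"unknown node: {origin}")
        for node in self.nodes.values():
            node.rooms[room_id] = MASTER if node.name == origin else SLAVE

    def roles(self, room_id):
        return {name: node.rooms[room_id] for name, node in self.nodes.items()}


cluster = Cluster([f"Union Node {i}" for i in range(1, 5)])
cluster.create_room("Union Node 1", "Survey")
cluster.create_room("Union Node 4", "ChatRoom")
for room_id in ("Survey", "ChatRoom"):
    print(room_id, cluster.roles(room_id))
```

Each room has exactly one MASTER, and different rooms can have their MASTER on different nodes.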

Master and slave rooms communicate with each other by dispatching remote room events. If a remote room event is dispatched by a master room, that event is automatically dispatched by each slave room. If a remote room event is dispatched by a slave room, that event is automatically dispatched by the master room.

Room modules attached to a clustered room can listen for remote room events and take action when these events are dispatched. When a room module is initialized, it can query the room to determine whether the room is the master or a slave, and use this information to choose which logic to attach to the room. Master logic acts as the brain, while slave logic generally responds to cues from the master and sends its own state for aggregation by the master.
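As a rough illustration of branching on the room's role, the sketch below models a survey module in which the master requests tallies and the slaves reply with their local votes. Everything here is an assumption made for the example: `ClusteredRoom`, `SurveyModule`, the event names `REQUEST_TALLY` and `TALLY`, and the synchronous in-process delivery are stand-ins, not Union's room module API.

```python
class ClusteredRoom:
    """Hypothetical stand-in for a clustered room; not Union's Room API."""

    def __init__(self, role):
        self.role = role
        self.peers = []       # master: its slave rooms; slave: [master room]
        self.listeners = {}   # event name -> callbacks

    def add_listener(self, event, callback):
        self.listeners.setdefault(event, []).append(callback)

    def dispatch_remote(self, event, data=None):
        # A remote event is dispatched by the matching rooms on other nodes.
        for peer in self.peers:
            for callback in peer.listeners.get(event, []):
                callback(data)


class SurveyModule:
    def __init__(self, room, local_votes):
        self.room = room
        self.local_votes = local_votes
        self.totals = {}
        # Branch on the role: the master aggregates, slaves report.
        if room.role == "MASTER":
            room.add_listener("TALLY", self.add_votes)
        else:
            room.add_listener("REQUEST_TALLY", self.report)

    def collect(self):
        """Master only: start from local votes, then ask the slaves."""
        self.totals = {}
        self.add_votes(self.local_votes)
        self.room.dispatch_remote("REQUEST_TALLY")
        return self.totals

    def report(self, _data):
        self.room.dispatch_remote("TALLY", self.local_votes)

    def add_votes(self, votes):
        for option, count in votes.items():
            self.totals[option] = self.totals.get(option, 0) + count


master = ClusteredRoom("MASTER")
slaves = [ClusteredRoom("SLAVE"), ClusteredRoom("SLAVE")]
master.peers = slaves
for slave in slaves:
    slave.peers = [master]

master_module = SurveyModule(master, {"yes": 2, "no": 1})
slave_modules = [SurveyModule(slaves[0], {"yes": 1}),
                 SurveyModule(slaves[1], {"no": 3})]
totals = master_module.collect()
print(totals)
```

In a real cluster the replies would arrive asynchronously over the network, so the master would accumulate totals as events come in rather than return them from a single call.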

For an example of how to create clustered rooms see the survey tutorial.

Servers

The server on each node can also use remote events to communicate with all other nodes in the cluster. If a server dispatches a remote server event, that event is automatically dispatched by the server on every other node in the cluster.

Server Affinity

Server affinity can be used in Union to ensure a client connects to a specific Union node for a specified duration. This is useful in certain load balancing scenarios such as:

- Load balancing backed by round robin DNS with a short TTL or where clients are not expected to have operating system level DNS caching.

- Load balancing without built-in server affinity support.

When server affinity is active, the u66 (SERVER_HELLO) message includes a public address and a duration, in minutes, for which the address is valid. Clients store this information locally and use it in place of the original address for the given duration.

For example, a client connects to the address pool.example.com. The u66 specifies server affinity to the address a.example.com for 60 minutes. For the next 60 minutes, all client connection attempts to pool.example.com instead use the address a.example.com. If a connection attempt to a.example.com fails, the client goes back to using the address pool.example.com.
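The client-side behavior in this example can be sketched as a small lookup table keyed by the original address. This is an illustrative model only: `AffinityResolver` and its methods are hypothetical, and discarding the stored affinity after a failed connection is an assumption about how a client "goes back" to the original address.

```python
import time


class AffinityResolver:
    """Hypothetical client-side helper; not part of any Union client API."""

    def __init__(self, clock=time.time):
        self.clock = clock
        self.store = {}  # original address -> (affinity address, expiry time)

    def record(self, original, affinity_address, duration_minutes):
        # Called when a u66 carries affinity information.
        expires = self.clock() + duration_minutes * 60
        self.store[original] = (affinity_address, expires)

    def resolve(self, original):
        # Use the affinity address while it is valid, else the original.
        entry = self.store.get(original)
        if entry is None:
            return original
        address, expires = entry
        if self.clock() >= expires:
            del self.store[original]
            return original
        return address

    def connection_failed(self, original):
        # Assumption: a failed attempt discards the stored affinity.
        self.store.pop(original, None)


now = [0.0]  # fake clock so the example does not need to wait
resolver = AffinityResolver(clock=lambda: now[0])

resolver.record("pool.example.com", "a.example.com", 60)
during = resolver.resolve("pool.example.com")

now[0] = 61 * 60  # 61 minutes later the affinity has expired
after_expiry = resolver.resolve("pool.example.com")

resolver.record("pool.example.com", "a.example.com", 60)
resolver.connection_failed("pool.example.com")
after_failure = resolver.resolve("pool.example.com")

print(during, after_expiry, after_failure)
```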

Server affinity can be configured at startup with union.xml (see below) or programmatically with the net.user1.union.api.Cluster interface.

Cluster Configuration

Edit union.xml to add a <cluster> element directly under the <union> root. Under <cluster>, define the gateway (the entry point that other server nodes use to connect to this server) and the nodes (the server nodes this server should connect to) as follows:

```xml
<union>
  <cluster>
    <gateway>
      <ip>192.168.0.10</ip>
      <port>9200</port>
    </gateway>
    <affinity>
      <address>a.example.com</address>
      <duration>60</duration>
    </affinity>
    <nodes>
      <retry-interval>30</retry-interval>
      <node>
        <ip>192.168.0.11</ip>
        <port>9200</port>
      </node>
      <node>
        <ip>192.168.0.12</ip>
        <port>9200</port>
      </node>
    </nodes>
  </cluster>
</union>
```

The above configuration causes the following:

- the server starts listening on 192.168.0.10:9200 for connections from other nodes

- clients connecting to the server will be told to use server affinity through the public address a.example.com and the affinity should be valid for 60 minutes

- the server will attempt to connect to a node at 192.168.0.11:9200

- the server will attempt to connect to a node at 192.168.0.12:9200

- when an initial connection to a node is not successful, the server will retry the connection every 30 seconds
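To check a configuration against the effects listed above, the `<cluster>` element can be read with a standard XML parser. The sketch below uses Python's `xml.etree.ElementTree`; the `summarize` function and the keys of the dictionary it returns are made up for this example and are not something Union provides.

```python
import xml.etree.ElementTree as ET

CONFIG = """
<union>
  <cluster>
    <gateway><ip>192.168.0.10</ip><port>9200</port></gateway>
    <affinity><address>a.example.com</address><duration>60</duration></affinity>
    <nodes>
      <retry-interval>30</retry-interval>
      <node><ip>192.168.0.11</ip><port>9200</port></node>
      <node><ip>192.168.0.12</ip><port>9200</port></node>
    </nodes>
  </cluster>
</union>
"""


def summarize(xml_text):
    """Hypothetical helper: extract the cluster settings from union.xml text."""
    cluster = ET.fromstring(xml_text).find("cluster")
    gateway = cluster.find("gateway")
    affinity = cluster.find("affinity")
    nodes = cluster.find("nodes")
    return {
        # Address this server listens on for other nodes.
        "listen": (gateway.findtext("ip"), int(gateway.findtext("port"))),
        # Public address and validity in minutes sent to clients.
        "affinity": (affinity.findtext("address"),
                     int(affinity.findtext("duration"))),
        # Seconds between retries of a failed initial connection.
        "retry_seconds": int(nodes.findtext("retry-interval")),
        # Nodes this server will try to connect to.
        "peers": [(n.findtext("ip"), int(n.findtext("port")))
                  for n in nodes.findall("node")],
    }


summary = summarize(CONFIG)
for key, value in summary.items():
    print(key, value)
```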

Only one connection is needed between any two nodes, so in the above example the servers listed in the nodes section do not need to initiate a connection to 192.168.0.10. Not every node in a cluster needs to be configured to connect to every other node. For example, you could configure a 10-node cluster so that each node connects to only a subset of the cluster.
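One way to spot unnecessary reciprocal entries is to treat each configured connection as an undirected link and look for pairs that are configured more than once. The sketch below assumes you have already collected each node's outbound list by hand; `analyze_links` is a hypothetical helper, and it says nothing about how Union routes events between nodes that are not directly connected.

```python
def analyze_links(outbound):
    """Return (links, redundant) for a mapping of node -> configured targets.

    Each link is a frozenset of two addresses, so A->B and B->A count as
    the same link. A link configured more than once is reported as redundant.
    """
    links = set()
    redundant = set()
    for source, targets in outbound.items():
        for target in targets:
            pair = frozenset((source, target))
            if pair in links:
                redundant.add(pair)
            links.add(pair)
    return links, redundant


# The example configuration: only 192.168.0.10 initiates connections.
outbound = {
    "192.168.0.10:9200": ["192.168.0.11:9200", "192.168.0.12:9200"],
    "192.168.0.11:9200": [],
    "192.168.0.12:9200": [],
}
links, redundant = analyze_links(outbound)
print(len(links), "links,", len(redundant), "redundant")

# If 192.168.0.11 also listed 192.168.0.10, that pair would be configured twice.
outbound["192.168.0.11:9200"] = ["192.168.0.10:9200"]
links2, redundant2 = analyze_links(outbound)
print(len(links2), "links,", len(redundant2), "redundant")
```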

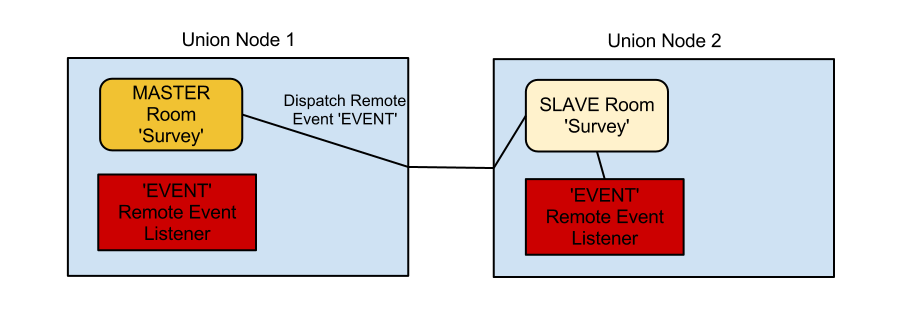

Understanding Remote Events

Remote events differ from normal events in that local listeners on the event producer do not receive the event. Only listeners on the matching remote object receive it. For example, if a master room "Survey" dispatches a remote event, only the listeners on the slave rooms for "Survey" receive that event. You can think of dispatching a remote event as a way for local objects to tell remote objects to dispatch events.

Both the master room and the slave room for the clustered room 'Survey' are listening for the remote event 'EVENT'. When the master room dispatches the remote event, the local listener is not notified. The remote event is sent across the cluster to Union Node 2 and dispatched remotely by the slave room. The listener on Union Node 2 then receives that event. In this way, the remote event lets the master room cause the slave room to dispatch events.
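The difference between a normal and a remote dispatch can be modeled in-process as follows. This is a sketch of the semantics only: the `Room` class and its `dispatch` and `dispatch_remote` methods are hypothetical, and a direct method call stands in for the network hop between nodes.

```python
class Room:
    """Hypothetical model of a clustered room on one node; not Union's API."""

    def __init__(self, label):
        self.label = label
        self.listeners = {}     # event name -> callbacks
        self.remote_rooms = []  # matching rooms on other nodes

    def add_listener(self, event, callback):
        self.listeners.setdefault(event, []).append(callback)

    def dispatch(self, event):
        # Normal event: delivered to this room's own listeners.
        for callback in self.listeners.get(event, []):
            callback(self.label)

    def dispatch_remote(self, event):
        # Remote event: local listeners are skipped; each matching
        # remote room dispatches the event to its own listeners.
        for room in self.remote_rooms:
            room.dispatch(event)


received = []
master = Room("Survey on Union Node 1 (master)")
slave = Room("Survey on Union Node 2 (slave)")
master.remote_rooms = [slave]
slave.remote_rooms = [master]

# Both rooms listen for 'EVENT'.
master.add_listener("EVENT", received.append)
slave.add_listener("EVENT", received.append)

master.dispatch_remote("EVENT")
after_master = list(received)

slave.dispatch_remote("EVENT")
after_slave = list(received)

print(after_master)
print(after_slave)
```

After the master's dispatch only the listener on Union Node 2 has been notified; the slave's dispatch then notifies only the listener on Union Node 1.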